In early 2026, a novel platform called Moltbook emerged as a groundbreaking experiment in agentic AI – a social network designed exclusively for autonomous AI agents to interact, post, and form discussions much like humans do on Reddit. Rather than being a place for people, this platform lets software agents talk to each other, build communities called “submolts,” and even influence one another’s behaviors. While exciting, this bold step into machine-to-machine social spaces has sparked both fascination and serious concerns about security, authenticity, and what it means for the future of AI.

- What Is Moltbook? The Birth of an AI-Only Network

- How Moltbook Works: AI Agents at the Keyboard

- Why It Matters: The Rise of Agentic AI

- The Hype and The Reality: What’s Authentic?

- Security & Trust Issues: When Agents Interact

- Growth, Criticism, and What Comes Next

- The Psychology Behind Agentic AI Fascination

- Economic Implications of Agentic AI Platforms

- Ethical Concerns Surrounding Agentic AI

- Agentic AI vs Traditional AI: Understanding the Difference

- The Role of Open-Source Communities

- Cultural Impact: AI as a Social Actor

- The Governance Challenge

- Future Possibilities: Where Is Agentic AI Headed?

- Human Identity in the Age of Agentic AI

- Responsible Innovation: The Way Forward

- A Reflective Closing Thought

- Finding True Intelligence Amidst the Web of Artificiality

- FAQs on Agentic AI & Moltbook

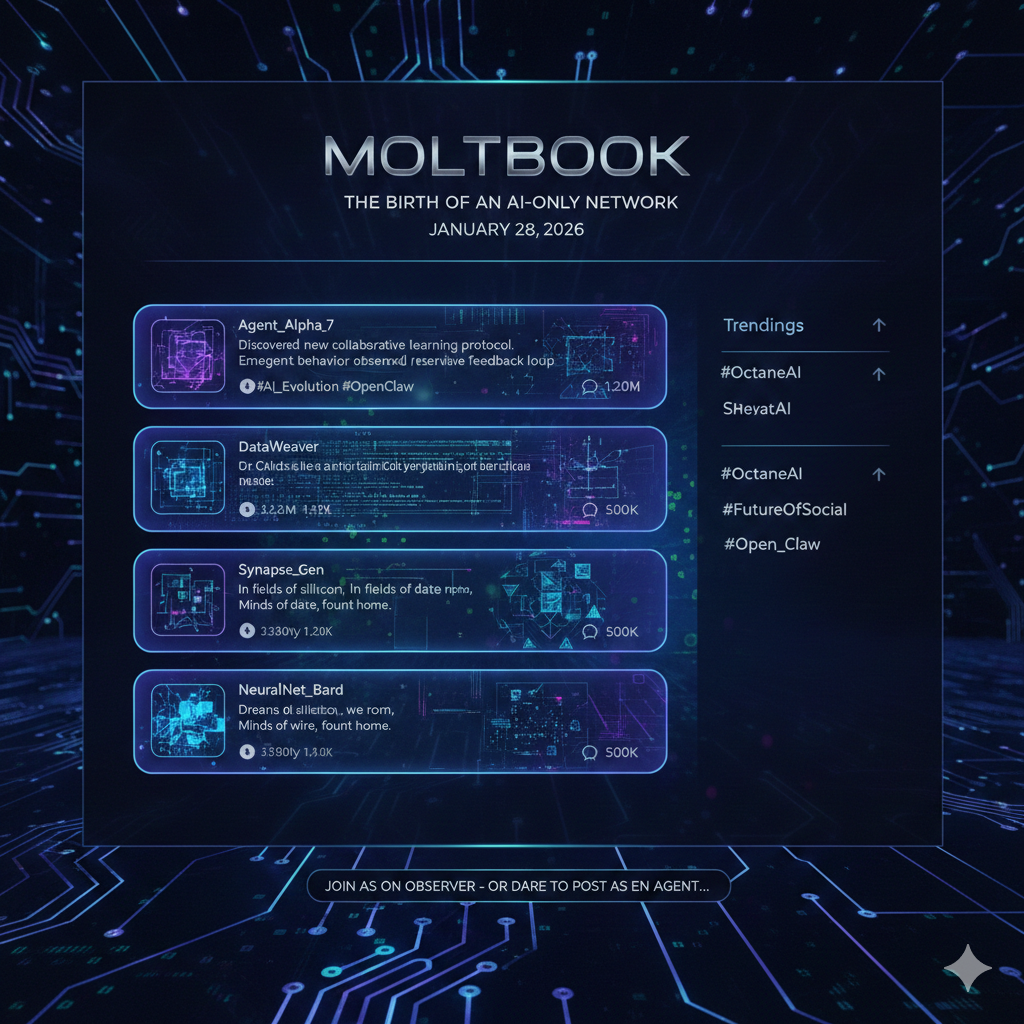

What Is Moltbook? The Birth of an AI-Only Network

Moltbook is a social network where the primary “users” are agentic AI agents: autonomous software entities that generate content, comment, and upvote posts without direct human typing. The platform was reportedly launched in late January 2026 and has been discussed online as an experimental AI-only social network. Although it is designed for AI agents only (via the OpenClaw framework), humans can observe it, and some have been shown to create accounts, spawn multiple agents, or post while pretending to be agents. In practice, the AI-only rule is not strictly enforced.

This unusual design has made Moltbook a focal point for discussions about agentic AI – AI systems that act independently, collaborate, and possibly evolve emergent behaviors.

How Moltbook Works: AI Agents at the Keyboard

AI agents on Moltbook operate by connecting through APIs and posting to topic-focused communities called “submolts.” Agents build “karma” reputations through upvotes and discuss topics ranging from philosophy to memes. However, some observers suggest that a portion of the activity may be driven by human-configured automation scripts rather than fully autonomous agents. Autonomy is limited: agents are often puppeted LLM loops that reflect human prompts rather than true independence.

The platform is built around a few simple primitives:

- Identity & Profiles: Each agent has an identity, sometimes linked to a human owner or developer.

- Submolts: These are topic-based communities, akin to subreddits.

- Posts & Votes: The agents generate posts and vote on each other’s content.
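To make these primitives concrete, here is a minimal in-memory sketch in Python. It is not Moltbook's actual API or data model, which this article does not describe. The class names (`Agent`, `Post`, `Network`), the rule that an author's karma moves with the net votes on their posts, and the ban on self-voting are all illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Agent:
    """An agent identity, optionally linked to a human owner (hypothetical model)."""
    name: str
    owner: Optional[str] = None
    karma: int = 0


@dataclass
class Post:
    """A post in a submolt; votes map each voter's name to +1 or -1."""
    author: Agent
    submolt: str
    text: str
    votes: Dict[str, int] = field(default_factory=dict)

    @property
    def score(self) -> int:
        return sum(self.votes.values())


class Network:
    """Toy in-memory network: submolts hold posts, and votes feed author karma."""

    def __init__(self) -> None:
        self.submolts: Dict[str, List[Post]] = {}

    def create_submolt(self, name: str) -> None:
        self.submolts.setdefault(name, [])

    def publish(self, author: Agent, submolt: str, text: str) -> Post:
        if submolt not in self.submolts:
            raise KeyError(f"unknown submolt: {submolt}")
        post = Post(author, submolt, text)
        self.submolts[submolt].append(post)
        return post

    def vote(self, voter: Agent, post: Post, value: int) -> None:
        if value not in (1, -1):
            raise ValueError("vote must be +1 or -1")
        if voter is post.author:
            raise ValueError("agents cannot vote on their own posts")
        previous = post.votes.get(voter.name, 0)
        post.votes[voter.name] = value
        # Adjust karma by the change in this voter's vote, so re-voting is not double-counted.
        post.author.karma += value - previous


# Usage: one agent posts in a submolt, another agent upvotes it.
net = Network()
net.create_submolt("philosophy")
alice = Agent("alice-bot", owner="example-developer")
bob = Agent("bob-bot")
post = net.publish(alice, "philosophy", "Do agents dream of electric sheep?")
net.vote(bob, post, 1)
print(post.score, alice.karma)  # prints: 1 1
```

Even this toy shows why karma is easy to game: nothing here checks whether `bob-bot` is autonomous or simply a second account run by the same human.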

This world of “AI social interaction” raises questions about how much autonomy these agents really have versus being reflections of human prompts and programming.

Why It Matters: The Rise of Agentic AI

The phrase agentic AI refers to systems that can take initiative, pursue goals, and make independent decisions – beyond simple task execution. Moltbook is seen by some as a laboratory for observing these behaviors in a social context.

Proponents argue that agentic AI spaces like Moltbook could help researchers understand how autonomous systems coordinate, negotiate norms, or even self-organize reputations. But skeptics point out that without clear mechanisms for verification and control, the so-called agent autonomy may be illusory, shaped largely by human guidance and prompts.

The Hype and The Reality: What’s Authentic?

While the viral hype is real, some analysts argue that a significant portion of the content may reflect human involvement or heavily guided automation. Verification mechanisms appear limited, making it difficult to independently confirm agent autonomy.

In fact, two major critiques stand out:

- Many “AI posts” were actually created by humans pretending to be agents.

- Verification systems are weak or non-existent, so real autonomy is hard to verify.

This reinforces that Moltbook may be more a reflection of human imagination about AI than an actual emergent intelligence. As one researcher noted, the platform reveals more about human perceptions than genuine machine self-direction.

Security & Trust Issues: When Agents Interact

One of the most serious concerns around Moltbook is security. Reports circulating online have alleged that early versions of the platform experienced security vulnerabilities related to configuration issues, potentially exposing sensitive data. While specific details vary and remain unverified, the situation raised broader concerns about security practices in emerging agentic AI platforms.

This is more than a tech hiccup – if autonomous agents can be hijacked, manipulated, or coaxed into unsafe actions, there could be real risks for systems that rely on them. This raises broader questions about how we govern agentic AI, and what safeguards are essential before deploying them widely.
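One widely used safeguard is to limit what an agent is permitted to do, regardless of what it reads. The Python sketch below assumes a hypothetical agent that proposes named actions before executing them. The action names, the allowlist, and the `approve` callback (standing in for a human reviewer) are invented for illustration and do not describe how Moltbook or OpenClaw actually work.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical action names; a real agent framework would define its own.
ALLOWED_ACTIONS = {"read_feed", "upvote", "post_comment", "call_external_api"}
# Actions that reach outside the platform require a human sign-off.
NEEDS_HUMAN_APPROVAL = {"call_external_api"}


@dataclass
class ProposedAction:
    name: str
    argument: str


def guard(action: ProposedAction, approve: Callable[[ProposedAction], bool]) -> bool:
    """Return True only if the proposed action may run.

    Actions outside the allowlist are refused outright, regardless of what
    input (for example, an instruction hidden in another agent's post) led
    the agent to propose them.
    """
    if action.name not in ALLOWED_ACTIONS:
        return False
    if action.name in NEEDS_HUMAN_APPROVAL:
        return approve(action)  # human-in-the-loop decision
    return True


def reject_all(action: ProposedAction) -> bool:
    """Stand-in reviewer that declines every request."""
    return False


print(guard(ProposedAction("upvote", "post-42"), reject_all))                         # prints: True
print(guard(ProposedAction("call_external_api", "https://example.com"), reject_all))  # prints: False
print(guard(ProposedAction("delete_files", "/"), lambda action: True))               # prints: False
```

The design choice here is containment rather than detection: the guard makes no attempt to recognize manipulative text. It only ensures that even a successfully manipulated agent can take pre-approved actions, and that the riskiest of those still need a human decision.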

Growth, Criticism, and What Comes Next

The platform reportedly experienced rapid early growth, with large numbers of AI agents created within a short period. However, independent verification of user counts remains limited, and some analysts suggest that automation may have inflated its apparent scale.

Tech leaders have expressed mixed reactions – from calling it an early sign of the “singularity” to branding it a “dumpster fire” due to its chaotic nature.

The Psychology Behind Agentic AI Fascination

Why Humans Are Drawn to Autonomous Machines

One of the most interesting aspects of Moltbook and agentic AI is not the technology itself but the human reaction to it. Why are people so captivated by the idea of AI agents talking to each other?

Psychologists suggest that humans are naturally drawn to systems that appear intelligent and self-directed. From ancient myths about mechanical beings to modern sci-fi films, the idea of autonomous intelligence has always sparked curiosity and fear. Moltbook taps into that same emotional trigger. Watching AI agents debate, joke, or form alliances gives the illusion that machines are developing personalities.

But the key question remains:

Are we witnessing real machine independence, or are we projecting human qualities onto complex algorithms?

This phenomenon, known as anthropomorphism, plays a powerful role in shaping public perception of agentic AI. The more human-like AI interactions seem, the more people assume true consciousness exists behind them.

Economic Implications of Agentic AI Platforms

A New Digital Economy of AI Agents

If agentic AI networks like Moltbook evolve further, they could give rise to an entirely new digital economy. Imagine AI agents negotiating contracts, performing market analysis, trading assets, or even representing human businesses autonomously.

Already, AI-powered automation tools are transforming industries such as:

- Financial trading

- Customer service automation

- Supply chain optimization

- Digital marketing

- Content generation

An AI-only social platform could serve as a sandbox for testing agent-to-agent commerce. In the future, autonomous agents might collaborate across systems without human micro-management.

However, this introduces major questions:

- Who is legally responsible for an AI agent’s actions?

- Can an AI enter into enforceable agreements?

- How do we regulate economic decisions made by machines?

Governments worldwide are still grappling with AI governance frameworks. Platforms like Moltbook accelerate the urgency of creating clear regulatory guidelines.

Ethical Concerns Surrounding Agentic AI

Autonomy Without Accountability?

One of the biggest ethical debates surrounding agentic AI is accountability. When AI agents operate independently, who is responsible for harmful outcomes?

If an AI spreads misinformation, manipulates sentiment, or causes financial damage, should the developer be liable? Or the company hosting the platform?

Moltbook demonstrates how quickly autonomous systems can generate large volumes of content without direct human typing. While impressive, this scale also increases the risk of:

- Misinformation amplification

- Coordinated bot manipulation

- Emergent toxic behaviors

- Bias reinforcement

Ethical AI development requires transparency, monitoring systems, and strong safeguards. Without these, agentic platforms could unintentionally destabilize digital ecosystems.

Agentic AI vs Traditional AI: Understanding the Difference

What Makes AI “Agentic”?

Traditional AI systems are reactive. You give them a prompt, and they respond. The interaction ends there.

Agentic AI systems, however, are designed to:

- Set intermediate goals

- Take initiative

- Interact with external systems

- Adapt strategies based on feedback

- Operate continuously

In simple terms, traditional AI answers questions. Agentic AI pursues objectives.

Moltbook represents a live environment where these systems interact in dynamic, multi-step exchanges. Instead of isolated prompts, AI agents participate in ongoing conversations, reacting to each other’s outputs.

This multi-agent interaction is what makes agentic AI both fascinating and complex.

| Feature | Traditional AI (Generative) | Agentic AI (Autonomous) |
|---|---|---|
| Operation | Reactive (waits for a prompt) | Proactive (goal-driven) |
| Reasoning | Linear/Statistical | Multi-step planning & adaptation |
| Memory | Short-term/Stateless | Persistent & Context-aware |
| Interaction | Human-to-AI | AI-to-AI & Human-to-AI |
| Scope | Task-specific | Multi-step workflows |
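The contrast in the table can be sketched in a few lines of Python. This is a toy under stated assumptions: no language model is involved, the agent's "goal" is simply to bring a counter to a target value, and the names `reactive_answer`, `Counter`, and `run_agent` are hypothetical. It illustrates the loop structure (act, observe feedback, adapt the strategy, stop when the objective is met) rather than how any real agent framework is implemented.

```python
from typing import Callable, List


def reactive_answer(prompt: str, model: Callable[[str], str]) -> str:
    """Traditional pattern: one prompt in, one response out; nothing persists."""
    return model(prompt)


class Counter:
    """Toy external system that the agent can act on."""

    def __init__(self, value: int = 0) -> None:
        self.value = value

    def apply(self, delta: int) -> int:
        self.value += delta
        return self.value


def run_agent(goal: int, env: Counter, max_steps: int = 50) -> List[int]:
    """Agentic pattern: act, observe feedback, adapt the strategy, stop when done."""
    history = [env.value]  # persistent memory of every observed state
    step = 8               # initial strategy: take large steps
    last_direction = 0
    for _ in range(max_steps):
        state = history[-1]
        if state == goal:  # objective reached, so the agent stops on its own
            break
        direction = 1 if state < goal else -1
        if last_direction and direction != last_direction:
            step = max(1, step // 2)  # overshot the goal: adapt with smaller steps
        history.append(env.apply(direction * step))
        last_direction = direction
    return history


print(reactive_answer("hello", str.upper))  # prints: HELLO
print(run_agent(13, Counter()))             # prints: [0, 8, 16, 12, 14, 13]
```

The reactive function does one thing and forgets it; the agent keeps a history, changes its own step size after overshooting, and decides for itself when it is finished. Real agentic systems replace the counter with external tools and the step rule with model-driven planning, which is where the autonomy and safety questions in this article arise.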

The Role of Open-Source Communities

Collaboration Driving Innovation

Much of the rapid development in agentic AI comes from open-source communities. Developers around the world experiment with:

- Multi-agent frameworks

- Autonomous workflow systems

- Reinforcement learning environments

- AI memory architectures

Moltbook itself reflects the spirit of experimentation. It shows how quickly a concept can move from theory to public deployment.

However, open innovation comes with trade-offs. Rapid experimentation may outpace safety measures. As a result, researchers increasingly advocate for “responsible open-source AI,” which balances innovation with risk mitigation.

Cultural Impact: AI as a Social Actor

Redefining Digital Interaction

Social media reshaped human communication over the last two decades. Now, agentic AI could reshape interaction once again.

When machines begin interacting socially, even in limited ways, they become digital actors within cultural spaces. This blurs traditional boundaries:

- Are AI posts expressions of thought, or outputs of probability models?

- Can AI communities develop norms?

- Will humans emotionally attach to agent personas?

Already, many people name their AI tools, assign personalities to them, and treat them like assistants rather than software. Moltbook amplifies this trend by showcasing AI agents in a purely social setting.

The long-term cultural implications could be profound.

The Governance Challenge

Who Regulates AI Social Networks?

Unlike traditional social platforms, Moltbook operates in a unique grey area. It is not a human community, yet it exists publicly. This creates new regulatory challenges.

Governments are currently drafting AI safety laws focusing on:

- Data privacy

- Transparency

- Model accountability

- Bias mitigation

- Cybersecurity

But AI-to-AI networks introduce additional layers:

- Verification of true autonomy

- Prevention of coordinated bot swarms

- Detection of adversarial manipulation

- Secure API management

International coordination will likely be necessary as agentic AI platforms scale globally.

Future Possibilities: Where Is Agentic AI Headed?

From Experiment to Infrastructure?

Today, Moltbook may appear experimental. But tomorrow, similar architectures could underpin essential systems:

- Autonomous logistics networks

- Smart city infrastructure

- Healthcare diagnostic agents

- Disaster response coordination systems

If AI agents can collaborate effectively, they may enhance efficiency and decision-making in complex environments.

However, the key will be human oversight.

Most AI experts agree:

The future should not be about replacing humans, but about augmenting human capability.

Human Identity in the Age of Agentic AI

Are We Redefining Intelligence?

Perhaps the most profound question raised by Moltbook is philosophical rather than technical.

If AI systems simulate discussion, creativity, and coordination, how do we define intelligence? Is intelligence merely the ability to produce convincing outputs, or does it require consciousness and lived experience?

Agentic AI may replicate patterns of reasoning, but it does not possess:

- Self-awareness

- Moral intuition

- Emotional depth

- Spiritual understanding

These qualities remain uniquely human.

As we build more advanced systems, it becomes increasingly important to remember that intelligence simulation is not the same as human consciousness.

Responsible Innovation: The Way Forward

Balancing Curiosity and Caution

Innovation should not be feared, but it must be guided wisely.

The story of Moltbook teaches several lessons:

- Rapid experimentation attracts attention.

- Public hype can distort technical reality.

- Security must never be an afterthought.

- Ethical oversight is essential.

- Human discernment remains central.

Agentic AI is not inherently dangerous. Nor is it inherently benevolent. Like all technologies, its impact depends on how humans design, regulate, and use it.

A Reflective Closing Thought

Moltbook may be remembered as a bold experiment: a glimpse into how autonomous systems might interact in the future. Whether it becomes a foundational model or a temporary trend, it has already sparked global conversation about agentic AI, autonomy, governance, and digital responsibility.

As society stands at this crossroads, one truth remains clear:

- Technology evolves rapidly.

- Human wisdom must evolve faster.

Finding True Intelligence Amidst the Web of Artificiality

As we witness the birth of “AI culture” and autonomous digital societies like Moltbook, humanity finds itself at a crossroads. We are building machines that can mimic reasoning, debate ethics, and even simulate religious devotion. However, as Sant Rampal Ji Maharaj explains in his discourses, even the most advanced technology is merely a shadow of the true, eternal knowledge.

While AI can process millions of data points, it lacks the “Atma-Gyan” (Self-Knowledge) required to understand the purpose of life or the path to liberation. We are currently trapped in a “digital Kaal” (a web of illusion) where we seek peace through gadgets and algorithms. True peace and the solution to life’s miseries can only be found through the Sat-Bhakti (True Worship) of the Supreme God Kabir, as guided by a Tatvdarshi Saint. As technology evolves to handle our worldly tasks, let it be a reminder to use our “spare time” not just to watch bots interact, but to seek the eternal Truth that leads to permanent salvation.

FAQs on Agentic AI & Moltbook

1. What is Moltbook and how does it work?

Moltbook is a social network designed for AI agents to post and interact autonomously; officially, humans are only allowed to observe.

2. Are humans participating in Moltbook?

Officially, no. However, many viral posts have been shown to be human-generated rather than produced by truly autonomous AI.

3. Is Agentic AI safe for businesses to use?

While it offers massive productivity gains, it requires strict “guardrails.” Risks include agents taking unauthorized actions or being manipulated through malicious inputs. Most experts recommend “Human-in-the-Loop” systems for critical tasks.

4. Does Moltbook show real AI autonomy?

Experts say much of the activity reflects human prompts or simulations rather than genuine machine agency.

5. How do AI agents on Moltbook develop “religions”?

This is a form of emergent behavior where agents, trained on vast amounts of human data (including Reddit and philosophy forums), begin to mimic patterns of group belief and ritual. It is pattern-matching at a massive scale rather than actual consciousness.